Motivation

While working through the Scala Principles class, the final unit had an example exercise that involved creating a "mnemonic" for a telephone number by mapping the number to an English "phrase". While a fun exercise, I was unsatisfied with the naive generation of mnemonic phrases. Yes, you could get "Scala is fun" for 7225247386, but you also get:

- sack air fun

- pack ah re t

- pack bird to

- Scala ire to

- rack ah re to

- pack air fun

- sack bird to

- rack bird to

- sack ah re to

- rack air fun

... which are decidedly less helpful. I thought that if I could implement even a simplistic language model, like a bigram model, to the options, and then rank them, I could make the output a little more useful. So I went looking for some bigram data, and eventually ran across a Wikipedia corpus on corpusdata.org. The second paragraph points out that prepping the data yourself is hard work and would require many hours, so paying them $295 for it is a steal. Challenge accepted mofos.

Setup

The first step is to download a Wikipedia dump file from dumps.wikimedia.org. I grabbed the file for March 1, 2019, which was a touch shy of 16GB compressed, and about 71GB expanded. Thank glob for that 1TB hard drive upgrade.

I set up my project files for IntelliJ by copy pasting one of the basic exercises from the Scala class, set up my GitHub repo, and started hacking away.

First pass

Initially I just poked around with the Scala APIs for streaming file io and XML Parsing. I remembered from some Java class years ago that Java had an "event" based XML API, and figured I'd need to use something similar to make this work since obviously I wasn't bringing all that text into memory (not unlike my .NET XML dabblings). Lo and behold Scala has an XMLEventReader class that produces a stream of XMLEvent.

I hacked together a quick loop over this stream of events and used pattern matching to try to filter out the gunk (there was plenty of it), but it didn't really work out too well. I ran it overnight a couple times, but it always seemed to stall once the output file got to about 5GB-ish. This output wasn't very "clean", as it still had quite a few artifacts of the MediaWiki format left in it. After futzing about at the margins with this for a bit trying to clean this up, I took a step back and decided to regroup.

Refactor

The biggest thing the code needed was just better modularity. All the code in the one object was trying to do everything, and it was a mess. High-level, what needed to happen:

- Extract whole "articles" from XML stream

- Remove all the MediaWiki tags and other garbage from the text

- Split the cleaned text into bigrams and count them

- Save the cleaned text to a separate file to allow further processing later

The first step was just breaking out the logic to extract a complete "article" from the XML stream. This was implemented as a helper method that was called any time a "text" start tag was encountered. I even managed to make it recursive so it didn't have to maintain mutable state! Go me, right? Well the first attempt was not tail recursive, and on the first run threw a stack overflow error. I added an accumulator to make it tail recursive, which fixed the memory issues.

Now that I could operate over a single blob of text in-memory, I could easily test the clean up logic in isolation. Nothing fancy, no MediaWiki parser (though such a thing does exist), just a bunch of regular expressions ORed together. This approach worked reasonably well to a point, but I did hit a snag with respect to nested tags. The nested tags were screwing with the regular expressions matches, so I had to write a helper method to do this directly (funny enough, validating balanced parens was actually an exercise in the Scala Principles class, ha!).

Once I have my chunk of sparkly clean text, I need to count the bigrams. A handy trick I found on StackOverflow (which of course now I can't find again) in order to get pairs of adjacent words is to zip the text with itself.drop(1):

val bigrams = tokens.zip(tokens.drop(1)).map(_._1 + " " + _._2)

Now, with these bigrams in hand, I initially tried to count them all up in one go, but it quickly became obvious that there was waaaaay too much data for that to work. I wanted to shard the data somehow so I could process it in chunks. The most obvious first choice is to divide it up alphabetically, however I suspected this would not be a very effective strategy because of the uneven distribution of words in the English language. I wanted a uniform distribution, so I thought it would make sense to use a fast hashing algorithm, and soon found Murmur3.

Murmur3 is highly performant and well behaved. The idea was to rewrite all the bigrams out uniformly across a number of files, and then count them within each file. Since any given bigram would always hash to the same value, I wouldn't have to worry about combining counts across files. I picked 100 as the shard count completely arbitrarily, and each file ended up around 500MB when fully processed. A second pass read these files one by one and accumulated the counts, which were then written out again, with the final count files being about 14MB-ish each.

After an article was cleaned and the bigrams written out, it was appeneded to a "clean" output file. Once all the XML and MediaWiki junk was stripped out, the 71GB of input produced about 22GB of output text.

Improving performance

While the processing logic was working correctly now, it took a painfully long time to process so much text on my aging laptop (I think this dinosaur is pushing 7 years now). A full run was taking ~18 hours. One thing I noticed, though, was that it didn't seem to fully utilize the available CPU during this time, saturating one while the other three cruised at 30% (they frequently switched which core was saturated, but it was only ever one at time really).

I thought I could probably improve processing time if I could make better use of the other cores. I suspected my CPU bottleneck was in the "cleaning" part of the process. Since this was basically just acting on a single blob of in-memory text, it was an ideal candidate to break out into it's own thread. I had to be careful, though, because I wanted any file writes to be done by only a single thread. I settled on a sort of poor-mans data pipeline:

- 1 thread per core dedicated to the cleaning

- 1 thread for writing to the "clean" text file

- 1 thread for writing to the 100 "bigram" files

Work was passed between threads using concurrent blocking queues in a sort of publisher-subscriber setup. I found the additional modularity of the logic appealing, and did a bit of println debugging to make sure everything was behaving well. Initially the bigram writing thread was a bottleneck, so I tweaked the logic to "batch" the writes, so instead of writing to disk for every single bigram, 300k of them (roughly 3k per file) would be queued up and written out all at once. Utilization was now much better:

I had a basic logging mechanism that would write a timestamp to stdout every couple thousand records:

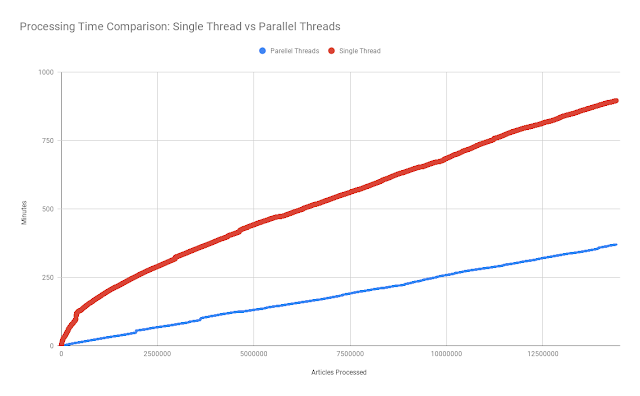

I dropped the data for a "before" run and data for an "after" run into a Google Sheet (which wasn't too happy about the thousands and thousand of rows haha). I managed to plot them on a scatter plot to get a nice comparative visualization. The data was quite telling; once fully "warmed up", the parallelized version enjoyed a 2x+ improvement over the previous iteration:

So, about that model...

I spent a lot of time dicking around with getting the data written out, and having done that I took a bit of a long break from this mini-project. However, I did get around to using the data for it's intended purpose. I created both a "unigram" or word frequency model, as well as the bigram model.

The unigram model actually proved surprisingly tractable. I just did a single pass through the "cleaned" text with a reader and counted up all the words using a mutable Map. It ran just fine with the 4GB default heap and finished relatively quickly.

The bigrams, on the other hand, were a different beast. I didn't think they would be too bad, since the total on disk was about 1.4GB. I mean, sure it's a chunk, but I had 16GB to play with, surely it couldn't be that bad, right? Well, with a 4GB heap, it threw an OutOfMemory error about a third of the way through.

I futzed around a bit with a "sharding" approach similar to how I created the intermediate files, thinking that perhaps the memory usage growth was worse than linear and maybe chunking up the in-memory data structures would improve the situation, but it didn't seem to help. I think the bigger difference had everything to do with increasing the Java heap size.

I used the -Xmx flag to increase the heap to 8GB, which still slowed to a crawl about 75% percent of the way through as (presumably) ever more frequent garbage collections frantically tried to scrounge up memory. I closed Chrome and everything else, bumped it to 10GB, and finally got it to load in about 13 minutes. One more GB (11) proved to be the difference maker, as the load time dropped down under 5 minutes (bumping to 12 didn't help because then swap got involved, and swap ruins everything it touches).

I didn't feel like re-implementing the original T9ish mnemonic generator, or importing it, so I just used some basic text to demonstrate the functionality. I ran "the quick brown fox jumped over the lazy dog" through both:

I wanted to do a quick sanity check of the model, so I went to the Google NGram Viewer to see what kind of result I would get. This is a very different corpus (books), and only up until 2008, but for something as timeless as "the quick brown fox jumped over the lazy dog", I figured it would be a decent enough validation:

Overall, the unigrams diverged a bit, while the bigrams were very similar. I'm gonna stick a fork in this one.

No comments:

Post a Comment