Exam Objectives

Implement one-way, request/reply, streaming, and duplex communication; implement Azure Service Bus and Azure Queues

Quick Overview of Training Materials

Exam Ref 70-487 - Chapter 3.8

[MSDN] One-Way Service, How to create, One-Way

[MSDN] Request/Reply Service, How to create

[MSDN] Duplex Service, How to create

[MSDN] Streaming Message Transfer

[MSDN] Large Data and Streaming

[MSDN] Using Service Bus Queues with WCF

[MSDN-Blog] My Hello Azure Service Bus WCF Service

[MSDN] Azure Service Bus

[Blog] WCF Best Practices: One way is not always really one way

[PluralSight] WCF End-to-End - Operations

My post on Creating a Service

Request/Reply

The Request/Reply pattern is basically the standard pattern we are all used to with web-based services. The client sends the request, the calling thread is either blocked or awaited (depending on whether the call was made synchronously or asynchronously), and the service returns a response (or a fault).

When you specify a service contract, this is the default pattern. The documentation for this pattern notes that you do not need to set IsOneWay to false, because that is the default value.
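For reference, here is a minimal request/reply contract. ICalculator and CalculatorService are hypothetical names used only for illustration and are not part of my demos. The point is that an operation with no IsOneWay setting is request/reply, and writing IsOneWay = false explicitly changes nothing:

```csharp
using System.ServiceModel;

// Hypothetical contract, used only to illustrate the request/reply default.
[ServiceContract]
public interface ICalculator
{
    // No IsOneWay setting: request/reply, so the caller waits for the int (or a fault).
    [OperationContract]
    int Add(int a, int b);

    // Explicitly setting IsOneWay = false is legal but redundant.
    [OperationContract(IsOneWay = false)]
    int Subtract(int a, int b);
}

public class CalculatorService : ICalculator
{
    public int Add(int a, int b) => a + b;
    public int Subtract(int a, int b) => a - b;
}
```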

Pretty much all the demo code I've created for the WCF topics uses this pattern somewhere, so it hardly seems necessary to create another demo...

One Way Channels

Unlike Request/Reply, one-way operations (as the name implies) do not get a reply from the service (beyond whatever the transport itself requires). This is sometimes described as a "fire and forget" pattern: the message from the client is written to the wire, and no reply is expected in return. While this intuitively implies that calls to one-way operations always return immediately, the requirements of some underlying protocols mean this is not always the case.

The Microsoft docs on One-Way include a lengthy note pointing out that HTTP is by definition a request/response protocol. When a one-way operation is invoked over an HTTP-based binding, the service returns an HTTP 202 (Accepted) response as soon as the request is received (but before the request is processed). This can lead to the appearance of blocking calls when the service is a singleton instance, because the 202 for any subsequent call is not sent until the previous request has finished processing.

In the WCF End-to-End course, the author points out that the presence of a transport session will cause a call to close the client proxy to block if there are still operations on the service that haven't finished. Message security and/or reliable sessions can lead to this kind of behavior, which is discussed in the corresponding best practices blog post.

In the service contract, the OperationContract attribute has a property called "IsOneWay" that must be set to true. The other requirement for one-way calls is that the methods must return "void" (and can't have out or ref parameters)... which makes sense, being that nothing is returned from a one-way call (thank you, Capt. Obvious).
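A minimal one-way contract looks something like this. IAuditLog and AuditLogService are hypothetical names. I made the service a singleton (InstanceContextMode.Single) to mirror the HTTP 202 scenario described above. If a one-way operation declared a return value or out/ref parameters, WCF would throw an InvalidOperationException when it builds the contract description, for example when the host or channel factory is created:

```csharp
using System.Collections.Generic;
using System.ServiceModel;

// Hypothetical contract, used only to illustrate one-way operations.
[ServiceContract]
public interface IAuditLog
{
    // Fire and forget: must return void, with no out/ref parameters.
    [OperationContract(IsOneWay = true)]
    void Record(string entry);
}

// Singleton, as in the HTTP 202 scenario. The default ConcurrencyMode.Single
// lets only one call in at a time, so the list needs no lock (and it is also
// why later one-way calls over HTTP can look like they block).
[ServiceBehavior(InstanceContextMode = InstanceContextMode.Single)]
public class AuditLogService : IAuditLog
{
    private readonly List<string> entries = new List<string>();

    public IReadOnlyList<string> Entries => entries;

    public void Record(string entry) => entries.Add(entry);
}
```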

Duplex Channels

Duplex channels create, in effect, two one-way channels between the client and the service, which can be used simultaneously and independently of one another. I used this kind of channel for my chat service demo (which I created to demonstrate callback contracts). Because each side has to know how to communicate with the other, it is necessary to specify two contracts. One is the service contract, used by the client to communicate with the service. The other is the callback contract, which specifies how the service can communicate with the client. Both of these contracts use one-way operations:

[ServiceContract(Namespace = "http://failedturing.com/chatdemo")]
public interface IChat
{
    [OperationContract(IsOneWay = true)]
    void SendMessage(ChatMessage message);

    [OperationContract(IsOneWay = true)]
    void ChangeName(string name);
}

[ServiceContract(Namespace = "http://failedturing.com/chatdemo", CallbackContract = typeof(IChat))]
public interface IChatManager
{
    [OperationContract(IsOneWay = true)]
    void RegisterClient(string name);

    [OperationContract(IsOneWay = true)]
    void UnRegisterClient(string name);

    [OperationContract(IsOneWay = true)]
    void BroadcastMessage(ChatMessage message);
}

The "IChat" service is the callback contract called by the service, and the "IChatManager" contract is what is called by the clients. As an illustration, when a client calls the "BroadcastMessage" method with "Hello!", the service will call the "SendMessage" method on all of the connected clients (we can make it skip the sender if we want).

While the process for the client to call the service is the same as any other service type, how does the service call the client back? This is where the CallbackChannel comes into play. In the chat service, when the client calls "RegisterClient", the callback channel for that client is stored in a dictionary. That way, when any client calls "BroadcastMessage", all the callback channels can be retrieved and "SendMessage" called.

IChat client = OperationContext.Current.GetCallbackChannel<IChat>();
if (!clients.ContainsKey(name))
{
    clients.Add(name, client);
}
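The post doesn't show the rest of the service, so here is a sketch of how the manager side could fit together. This is not the demo's actual code. ChatManager is an assumed class name, and the From/Text members on ChatMessage are hypothetical. The block repeats the two contracts so that it compiles on its own. The service is a singleton so that every caller shares the one callback dictionary. A callback to a client that has gone away throws, so that client is dropped:

```csharp
using System;
using System.Collections.Generic;
using System.Runtime.Serialization;
using System.ServiceModel;

// Hypothetical message shape; the demo's real ChatMessage may differ.
[DataContract]
public class ChatMessage
{
    [DataMember] public string From { get; set; }
    [DataMember] public string Text { get; set; }
}

[ServiceContract(Namespace = "http://failedturing.com/chatdemo")]
public interface IChat
{
    [OperationContract(IsOneWay = true)] void SendMessage(ChatMessage message);
    [OperationContract(IsOneWay = true)] void ChangeName(string name);
}

[ServiceContract(Namespace = "http://failedturing.com/chatdemo", CallbackContract = typeof(IChat))]
public interface IChatManager
{
    [OperationContract(IsOneWay = true)] void RegisterClient(string name);
    [OperationContract(IsOneWay = true)] void UnRegisterClient(string name);
    [OperationContract(IsOneWay = true)] void BroadcastMessage(ChatMessage message);
}

// One shared instance; calls may overlap, so the dictionary is guarded by a lock.
[ServiceBehavior(InstanceContextMode = InstanceContextMode.Single,
                 ConcurrencyMode = ConcurrencyMode.Multiple)]
public class ChatManager : IChatManager
{
    private readonly Dictionary<string, IChat> clients = new Dictionary<string, IChat>();
    private readonly object sync = new object();

    public void RegisterClient(string name)
    {
        // Proxy back to whichever client made this call.
        IChat client = OperationContext.Current.GetCallbackChannel<IChat>();
        lock (sync)
        {
            if (!clients.ContainsKey(name)) clients.Add(name, client);
        }
    }

    public void UnRegisterClient(string name)
    {
        lock (sync) { clients.Remove(name); }
    }

    public void BroadcastMessage(ChatMessage message)
    {
        // Copy under the lock, then call out without holding it.
        List<KeyValuePair<string, IChat>> snapshot;
        lock (sync) { snapshot = new List<KeyValuePair<string, IChat>>(clients); }

        foreach (var entry in snapshot)
        {
            if (entry.Key == message.From) continue; // optionally skip the sender
            try
            {
                entry.Value.SendMessage(message);
            }
            catch (Exception ex) when (ex is CommunicationException || ex is TimeoutException)
            {
                // The client went away; forget its dead callback channel.
                lock (sync) { clients.Remove(entry.Key); }
            }
        }
    }
}
```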

On the client side, one important distinction (versus simplex clients) is that the channel to the service must be created with the DuplexChannelFactory:

ChatService localService = new ChatService(); // implements the IChat callback contract
localService.Name = Console.ReadLine();
var dcf = new DuplexChannelFactory<IChatManager>(localService, "manager");
IChatManager manager = dcf.CreateChannel();

app.config:

<system.serviceModel>
  <client>
    <endpoint name="manager"
              address="http://localhost:8080/chatmgr/duplex"
              binding="wsDualHttpBinding"
              contract="ChatShared.IChatManager" />
  </client>
</system.serviceModel>

The client creates the callback service, then passes the instance of the callback service into the DuplexChannelFactory constructor. The DCF instance then creates a channel to the chat service itself. In this case, the channel binding is using wsDualHttpBinding.
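The app.config above covers only the client. A matching service-side endpoint, plus an optional client tweak, might look like the sketch below. ChatServer.ChatManager is an assumed implementation class name, and port 8081 is arbitrary. Because wsDualHttpBinding makes the client listen for callbacks over HTTP, WCF has to choose a listen address on the client. By default it uses a temporary address on port 80, and the clientBaseAddress binding attribute lets you pick one explicitly if that port is blocked or already taken:

```xml
<!-- Service app.config (ChatServer.ChatManager is a hypothetical class name) -->
<system.serviceModel>
  <services>
    <service name="ChatServer.ChatManager">
      <endpoint address="http://localhost:8080/chatmgr/duplex"
                binding="wsDualHttpBinding"
                contract="ChatShared.IChatManager" />
    </service>
  </services>
</system.serviceModel>

<!-- Client app.config variant: explicit callback listen address (port is arbitrary) -->
<system.serviceModel>
  <bindings>
    <wsDualHttpBinding>
      <binding name="chatDual" clientBaseAddress="http://localhost:8081/chatclient/" />
    </wsDualHttpBinding>
  </bindings>
  <client>
    <endpoint name="manager"
              address="http://localhost:8080/chatmgr/duplex"
              binding="wsDualHttpBinding"
              bindingConfiguration="chatDual"
              contract="ChatShared.IChatManager" />
  </client>
</system.serviceModel>
```

Depending on the account the client runs under, a custom client address may need an HTTP URL reservation (netsh http add urlacl) before the callback listener can open.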

Streaming

By default, messages in WCF are buffered, meaning the entire message is loaded into memory before any processing is done on it. The alternative to buffered transfer is streamed transfer. The BasicHttpBinding, NetTcpBinding, and NetNamedPipeBinding support streaming out of the box; if these don't fit the scenario, the only other alternative is a custom binding.

The use of streaming requires several tradeoffs. Certain WCF features require buffered messages in order to work: reliable messaging, transactions, and message-level security, to name a few. Message-level security requires the entire message to be buffered so that it can be signed and/or encrypted (the entire message must be hashed as part of the process), so only transport-level security is possible with streamed transfer. Reliable sessions require the message to be buffered to enable retransmission in the case of failures. The "Large Data and Streaming" article points out that the TCP and named pipe bindings are inherently reliable, so they are not really affected by these restrictions.

Turning on streaming is done at the binding level, using the "transferMode" attribute of the binding element. The values that can be used are "StreamedRequest", in which only requests are streamed, "StreamedResponse", which only streams responses, and "Streamed", which streams both. You could set it to "Buffered" as well, but that is the default value anyway, so...
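The transferMode config attribute corresponds to the TransferMode property on the binding classes, so the same settings can be made in code. This sketch reuses the numbers from my file service config further down. The StreamingBindings helper name and the TCP variant are my own illustration:

```csharp
using System.ServiceModel;

public static class StreamingBindings
{
    // Stream only responses over HTTP, like the file service demo.
    public static BasicHttpBinding CreateHttp()
    {
        return new BasicHttpBinding
        {
            TransferMode = TransferMode.StreamedResponse,
            // Still enforced when streaming: caps the total size of a received message.
            MaxReceivedMessageSize = 4000000000,
            // When streaming, only the SOAP headers are buffered against this limit.
            MaxBufferSize = 100000
        };
    }

    // Stream both directions over TCP; transport security is compatible with streaming.
    public static NetTcpBinding CreateTcp()
    {
        return new NetTcpBinding(SecurityMode.Transport)
        {
            TransferMode = TransferMode.Streamed,
            MaxReceivedMessageSize = long.MaxValue
        };
    }
}
```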

Another restriction on streamed transfers, mentioned in the "Streaming Message Transfer" doc, is that streamed operations can only have one input and one output parameter (a return value counts as an "out" parameter). That parameter represents the entire message body, so it must be a Stream, a Message, or an IXmlSerializable. The doc also mentions that SOAP headers are always buffered, and are subject to the MaxBufferSize transport quota.
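If you need to send something like a file name along with a streamed upload, the usual way around the one-parameter rule is a message contract. The extra values go into SOAP headers, which are buffered as described above, and the Stream becomes the only body member. FileUploadMessage, IFileUploadService, and the save-to-temp-folder behavior are all hypothetical:

```csharp
using System.IO;
using System.ServiceModel;

// Hypothetical upload message: the name is a (buffered) SOAP header,
// and the Stream is the one and only body member.
[MessageContract]
public class FileUploadMessage
{
    [MessageHeader(MustUnderstand = true)]
    public string FileName;

    [MessageBodyMember(Order = 1)]
    public Stream Data;
}

[ServiceContract]
public interface IFileUploadService
{
    // A single message contract in, void out: one body per direction.
    [OperationContract]
    void Upload(FileUploadMessage message);
}

public class FileUploadService : IFileUploadService
{
    public void Upload(FileUploadMessage message)
    {
        // Path.GetFileName drops any directory part the caller sent.
        string target = Path.Combine(Path.GetTempPath(), Path.GetFileName(message.FileName));
        using (var output = File.Create(target))
        {
            // Copies in chunks, so the whole upload is never held in memory.
            message.Data.CopyTo(output);
        }
    }
}
```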

I thought it would be interesting to demo streaming transfer, so I adapted one of my XML demos to use a stupid simple WCF service that streams file content (you pass the file path to the service). It's included in my GitHub repo. There isn't much to the contract or service implementation:

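using System;
using System.IO;
using System.ServiceModel;

[ServiceContract]
public interface IFileService
{
    [OperationContract]
    Stream getFile(string filename);
}

public class FileService : IFileService
{
    public Stream getFile(string filename)
    {
        Console.WriteLine("Requested file: " + filename);
        // Open read-only; WCF closes the returned stream once the response has been sent
        return new FileStream(filename, FileMode.Open, FileAccess.Read, FileShare.Read);
    }
}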

The client side is pretty simple as well. After adding a service reference to the running file service, this code runs the same "processing" step twice using an XmlReader: once with a reader that opens the file path directly, and once with a reader over the Stream returned by the WCF service. The "processing" isn't all that interesting, just calling Read() until the end of the file is reached and keeping a count (writing a "." to the console periodically):

private static string BIG = @"C:\XML\psd7003.xml";

public static void XmlReaderPerformanceTest()
{
    using (var client = new FileServiceReference.FileServiceClient())
    {
        // DTD validation is on, so psd7003.dtd has to be resolvable
        var settings = new XmlReaderSettings();
        settings.DtdProcessing = DtdProcessing.Parse;
        settings.ValidationType = ValidationType.DTD;
        settings.XmlResolver = new XmlUrlResolver();

        Console.WriteLine("Processing started via local filesystem...");
        using (var xr = XmlReader.Create(BIG, settings))
        {
            ProcessReader(xr);
        }

        Console.WriteLine("\n\nProcessing started via WCF file stream service...");
        // Disposing the reader does not close the stream, so dispose both
        using (var stream = client.getFile(BIG))
        using (var xr_wcf = XmlReader.Create(stream, settings))
        {
            ProcessReader(xr_wcf);
        }
    }
}
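ProcessReader itself isn't shown. Here is a hypothetical reconstruction that matches the description: call Read() until the end, count the nodes, and print a dot every so often. The dotEvery interval and the XmlProcessing class name are my own assumptions. It returns the count so that it is easy to check, and the calls in the original code, which ignore the result, still compile:

```csharp
using System;
using System.Xml;

public static class XmlProcessing
{
    // Hypothetical version of ProcessReader: reads every node,
    // writes "." every dotEvery nodes, and returns the node count.
    public static long ProcessReader(XmlReader reader, long dotEvery = 1000000)
    {
        long count = 0;
        while (reader.Read())
        {
            count++;
            if (count % dotEvery == 0)
            {
                Console.Write(".");
            }
        }
        Console.WriteLine($"\nRead {count} nodes.");
        return count;
    }
}
```

Because XmlReader is forward-only, this loop never holds more than the current node. That is what makes it a fair test of whether the WCF stream really arrives incrementally.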

I wanted a smoking gun that the service was really streaming, so I set the "maxBufferSize" attribute on the binding pretty small on both the service and the client. The "maxReceivedMessageSize" attribute still applies, and it threw an exception until I cranked it up big enough to accommodate the files I was shoving through the service (the "Protein Sequence Database" XML file is about 700MB). Here is the client side:

<system.serviceModel>
  <bindings>
    <basicHttpBinding>
      <binding name="BasicHttpBinding_IFileService"
               transferMode="StreamedResponse"
               maxBufferSize="100000"
               maxReceivedMessageSize="4000000000" />
    </basicHttpBinding>
  </bindings>
  <client>
    <endpoint address="http://localhost:19999/file"
              binding="basicHttpBinding"
              bindingConfiguration="BasicHttpBinding_IFileService"
              contract="FileServiceReference.IFileService"
              name="BasicHttpBinding_IFileService" />
  </client>
</system.serviceModel>
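The service side isn't shown. Here is a sketch that mirrors the client config. FileServiceHost.FileService and FileServiceHost.IFileService are assumed names, so substitute whatever namespace the service actually lives in. Since maxReceivedMessageSize limits what an endpoint receives, the big value really matters on the client, which receives the 700MB response. On the service it only matters if the requests themselves get large:

```xml
<system.serviceModel>
  <bindings>
    <basicHttpBinding>
      <binding name="streamedFiles"
               transferMode="StreamedResponse"
               maxBufferSize="100000"
               maxReceivedMessageSize="4000000000" />
    </basicHttpBinding>
  </bindings>
  <services>
    <!-- Hypothetical type names -->
    <service name="FileServiceHost.FileService">
      <endpoint address="http://localhost:19999/file"
                binding="basicHttpBinding"
                bindingConfiguration="streamedFiles"
                contract="FileServiceHost.IFileService" />
    </service>
  </services>
</system.serviceModel>
```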

The results were pretty cool. The WCF service was slower than the local stream, unsurprisingly, but it was definitely doing a streaming transfer. I did have to put the psd7003.dtd file in the same directory as the client executable... I'd changed the DTD path, since the original web address for the DTD was history (it returned a 404). Normal text editors choke on a 700MB text file, but EmEditor was able to get the job done, fortunately. Anyway, this is the result:

Azure Service Bus (Relay pattern)

Because creating an Azure Service Bus relay is covered in another topic, I'll focus briefly on the patterns involved. I found the explanation given in "My Hello Azure Service Bus WCF Service", as well as its hands-on examples, useful for understanding the basic idea of relays. The "Service Bus Fundamentals" document goes into greater depth on the design and applicability of relays and queues.

The Service Bus relay provides a mechanism for two-way communication between a client application and a service, using the relay as an intermediary. The killer app for this setup is allowing communication with services hosted on internal network infrastructure without punching holes in the firewall or setting up NAT port mappings, because the service opens an outbound connection to the relay rather than accepting inbound ones. As long as both the client and the service can reach the internet (specifically, the namespace created for the Service Bus), relaying is possible.
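Creating the relay is covered elsewhere, so this is only a rough sketch of the service-side config with the WindowsAzure.ServiceBus (Microsoft.ServiceBus) package, based on my reading of the Azure relay WCF samples. YOUR-NAMESPACE, YOUR-SAS-KEY, and the Service.EchoService/Service.IEchoContract names are placeholders. The netTcpRelayBinding and transportClientEndpointBehavior elements only resolve if the package's config extensions are registered, which the NuGet install normally does:

```xml
<system.serviceModel>
  <services>
    <!-- Hypothetical service and contract names -->
    <service name="Service.EchoService">
      <!-- The service connects OUT to the relay; no inbound port is opened -->
      <endpoint address="sb://YOUR-NAMESPACE.servicebus.windows.net/echo"
                binding="netTcpRelayBinding"
                contract="Service.IEchoContract"
                behaviorConfiguration="sbTokenProvider" />
    </service>
  </services>
  <behaviors>
    <endpointBehaviors>
      <behavior name="sbTokenProvider">
        <transportClientEndpointBehavior>
          <tokenProvider>
            <!-- Placeholder SAS credentials for the namespace -->
            <sharedAccessSignature keyName="RootManageSharedAccessKey" key="YOUR-SAS-KEY" />
          </tokenProvider>
        </transportClientEndpointBehavior>
      </behavior>
    </endpointBehaviors>
  </behaviors>
</system.serviceModel>
```

The client side points a client endpoint at the same sb:// address with the same binding and token behavior, and the relay stitches the two connections together.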

Azure Queues

Unlike relays, which represent a communication pattern analogous to request/reply, queues decouple sending a message from processing it: the consumer doesn't need to be running, or keeping up, at the moment the message is sent.

With queues, messages are sent one-way from the client to the Azure queue. The messages on the queue can then be read by a "consumer" and processed. With Service Bus Queues, reading messages off the queue can happen one of two ways. The first is ReceiveAndDelete, which deletes the message from the queue as soon as it is read (which means that if the consumer crashes while processing the message, the message is lost). The second is PeekLock, which locks (hides) the message without deleting it; the consumer completes the message to delete it once processing succeeds. With the PeekLock approach, if the processing application crashes, the lock eventually expires and the message is effectively returned to the queue.
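To make the difference between the two receive modes concrete, here is a toy in-memory model. To be clear, this is not the Service Bus API, which has its own client classes. ToyQueue, ToyMessage, and the explicit "now" parameter (used so that lock expiry is easy to demonstrate) are all hypothetical:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Toy model of Service Bus receive modes; NOT the real API.
public sealed class ToyMessage
{
    public ToyMessage(string body) { Body = body; }
    public string Body { get; }
    // Null means "not locked"; otherwise hidden from receivers until this time.
    public DateTime? LockedUntil { get; internal set; }
}

public sealed class ToyQueue
{
    private readonly List<ToyMessage> messages = new List<ToyMessage>();
    private readonly TimeSpan lockDuration;

    public ToyQueue(TimeSpan lockDuration) { this.lockDuration = lockDuration; }

    public int Count => messages.Count;

    public void Send(string body) => messages.Add(new ToyMessage(body));

    // ReceiveAndDelete: removed the moment it is handed out, so a consumer
    // crash during processing loses the message.
    public ToyMessage ReceiveAndDelete(DateTime now)
    {
        ToyMessage m = FirstVisible(now);
        if (m != null) messages.Remove(m);
        return m;
    }

    // PeekLock: the message stays on the queue, hidden until the lock expires.
    public ToyMessage PeekLock(DateTime now)
    {
        ToyMessage m = FirstVisible(now);
        if (m != null) m.LockedUntil = now + lockDuration;
        return m;
    }

    // Complete deletes a locked message, but fails once the lock has expired,
    // since by then another consumer may already have picked it up.
    public bool Complete(ToyMessage m, DateTime now)
    {
        if (m.LockedUntil == null || m.LockedUntil <= now) return false;
        return messages.Remove(m);
    }

    private ToyMessage FirstVisible(DateTime now) =>
        messages.FirstOrDefault(m => m.LockedUntil == null || m.LockedUntil <= now);
}
```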

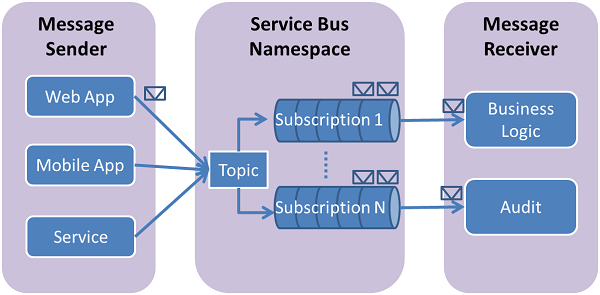

While it is possible to have multiple consuming services for a queue, each message is delivered to only one of them. A variation on queues, called a Topic, allows the same message to be delivered to multiple consumers (subscriptions) if it meets the filter criteria of those subscriptions. This pattern is also called "Publish/Subscribe" or pub/sub. This graphic from the documentation (ironically, for using Service Bus with Java...) illustrates this model:

So one "subscriber" reading a message with "ReceiveAndDelete" will not prevent other subscribers from seeing the same message, since each subscription gets its own copy of each message.
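The same toy approach works for topics. Again, this is not the Service Bus API. ToyTopic is hypothetical, and a plain predicate over string properties stands in for a real subscription filter rule. It shows the two properties described above: a filter decides which subscriptions get a message, and each matching subscription gets its own copy:

```csharp
using System;
using System.Collections.Generic;

// Toy model of a topic with filtered subscriptions; NOT the real API.
public sealed class ToyTopic
{
    private sealed class Subscription
    {
        public Func<IDictionary<string, string>, bool> Filter;
        public Queue<string> Messages = new Queue<string>();
    }

    private readonly Dictionary<string, Subscription> subscriptions =
        new Dictionary<string, Subscription>();

    public void Subscribe(string name, Func<IDictionary<string, string>, bool> filter)
    {
        subscriptions[name] = new Subscription { Filter = filter };
    }

    // Every subscription whose filter matches gets its own copy of the message.
    public void Publish(string body, IDictionary<string, string> properties)
    {
        foreach (Subscription s in subscriptions.Values)
        {
            if (s.Filter(properties)) s.Messages.Enqueue(body);
        }
    }

    // ReceiveAndDelete on one subscription removes only that subscription's copy.
    public string ReceiveAndDelete(string subscription)
    {
        Queue<string> q = subscriptions[subscription].Messages;
        return q.Count > 0 ? q.Dequeue() : null;
    }

    public int Pending(string subscription) => subscriptions[subscription].Messages.Count;
}
```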
