Background

I'm using the Education Assistance project for this exercise, which I discussed in a previous posts on unit testing (parts 1, 2, 3, 4). I hadn't touched it in ... oh... probably 9 months, but it wasn't too different from how I left it. Pulled the repo, build, test... whoops failing tests in there. I didn't want to spend a lot of time on side issues so the tests that failed I just commented out. Still had 54 passing tests so I felt ok with it. (As an aside, I also managed to build it in my Jenkins environment... copied the MSBuild test job and made a few config changes, took maybe 10 minutes... I thought that was pretty cool... anyway...)

Creating a SQLite copy of SQL Server database

The first step was to create a SQLite database to work with. Fortunately, I found a conversion utility that made it pretty painless.

Setting up the SQLite project

The real work was in getting EF talking to the newly created SQLite database. The first tutorial I found didn't get me anywhere, since it counted on manually creating the DB context, which to me felt like it would have completely defeated the point. I mean, this is supposed to make my life easier, and manually coding or copy-pasting from the generated DB context for SQL Server seemed counter-productive. Fortunately, though, I was able to find a detailed step by step tutorial that would allow me to create an .edmx file and generate the models (foreshadowing: this kills my whole plan... ).

In order to create an .edmx file, you need the design time components for SQLite. This depends on which version of Visual Studio you are using, and the installer can be downloaded from the Sqlite downloads page. As the tutorial above so astutely points out, the page is a mess. This is what you want:

I'd include the direct link, but the next new version would break it anyway, and if you aren't using VS 2013 it wouldn't help you anyway. I didn't have to install the EF 6 Tools. With the design time components installed (and, I believe, Visual Studio restarted), you can add a connection to the SQLite database through Server Explorer, browse tables, execute queries, etc...:

The next step is to add the SQLite nuget package to the project. When I searched for "sqlite" in "Manage NuGet Packages", the top result was the one I needed. This will install Entity Framework as well, so this should be the only one you need to explicitly add (at least for our purposes):

The one thing this install does is neglect to include ALL of the necessary provider definitions in the app.config file. The following code needs to be added to the <system.data><DbProviderFactories> element:

<remove invariant="System.Data.SQLite" /> <add name="SQLite Data Provider" invariant="System.Data.SQLite" description=".NET Framework Data Provider for SQLite" type="System.Data.SQLite.SQLiteFactory, System.Data.SQLite" />

Without the modification, you'll get this exception:

That done, do a rebuild (vanilla build didn't seem to work). If you try to add the ADO Entity Model and get to the point in the wizard where you add the database connection, and SQLite isn't there, it's because the project needs rebuilt:

<REBUILD>

And this is basically where the tutorial left off. So now what?

Integrating SQLite with existing interfaces

My first thought was to simply copy over the SQLServer implementation of the interfaces over, change some class names with find/replace, and point the whole works at the newly created DbContext backed by SQLite. Easy right? I mean, that's why I used interfaces in the first place, so everything could be swapped out. Seemed to go according to plan except this one little hiccup:

"What the hell?!" ... so I'm baffled at first. I mean, the generated context has all the same entities, right? Why does the interface care? So I look at the interface:

It exact meaning of the error message dawned on me pretty much the moment I looked at the interface. Just because the data is basically the same, and even if we assume the generated classes are identical in structure (they aren't), strong typing comes into play here, and the classes are NOT the same. I thought about it for a moment, and decided to try changing the Sqlite repo and unit of work to use the "Models" namespace (and thus, the model classes generated for use with SQL Server)... and the DbContext as well (though more for convenience than functionality)... sigh, I read that now and realize what a monumentally stupid idea it was, but we'll get to why in a second.

Funny part is, this will all build just fine. So I think I'm good to go, oh I'm so clever...

It all falls down

Now it was time to write a unit test. That was, after all, the whole point of this exercise. So I pick an easy one that is just doing a select. I query the database through Server Explorer so I can get a user id to use with my FakeUser object, then I copy and tweak an existing test (side note, I converted the tests to NUnit from the Microsoft framework so they would work with my Jenkins set up... ):

[Test] public void ApplicationController_Index_Should_Return_Applications_For_Valid_User_SQLite() { var db = new SqliteUnitOfWork(); IPrincipal fakeUser = FakeUser.GetFakeUser("asdfasdfasdfasdf"); var controller = new ApplicationController(db, fakeUser); //Act var result = controller.Index() as ViewResult; //Assert //Each test user has two apps in the database, so there should be two results. var data = ((EnumerableQuery<Application>)result.ViewData.Model).ToList(); Assert.IsTrue(data.Count == 2); }

Once I fixed the errors caused by not having Entity or SQLite installed in the test project, I finally ended up with this error:

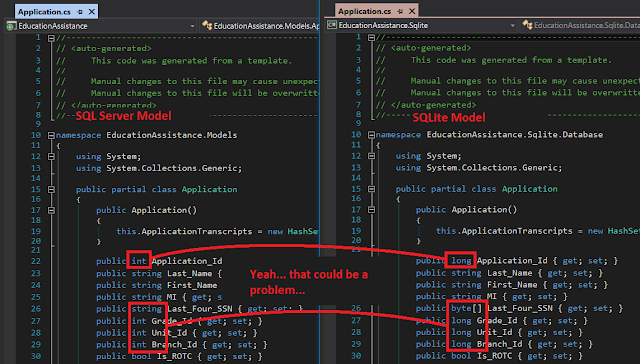

This error had me scratching my head. It read like a type mismatch, but had to do with the mapping. So I dug a little bit, comparing the generated models for SQLite to those generated for SQL Server:

So in classic fashion, I tried just changing the type of the Application_Id field on the SQLite model from long to int, but I was still getting the same error. So I went in search of the "eaModel.Application" business. I found it in the database model diagram. First run through, I think this was it, game over. Writing it up, I discovered that you can actually change the types through the properties. Wow, neat... lets try that, see what happens:

To my shock and amazement, it actually cleared out that error. Now granted, at this point I'm thinking "If I have to manually tweak the entire model, then this is still a bust..." Now the next one that failed was the Last_Four_SSN field, which is a string in the SQL Server model but a byte[] in the SQLite version. I had a feeling this wasn't going to be as work as nicely as the int - long switch, but I tried it anyway. I hate being right sometimes:

"Well, I wonder if I can change the type in SQLite, just for academic purposes"... aaaaand it turns out the answer is no. Modifying columns is not something you can do in SQLite, and the datatypes you can use are pretty course. The Last_Four_SSN is a varbinary in SQL Server, and evidently this maps to a blob in SQLite, which wants a byte[] in the entity model. Game over.

Conclusions

I think if one really, really, wanted to, they could make this work. For example, one could:

- write interfaces for all the models with accessors to all the relevant properties, and create concrete implementations that delegate to the generated classes and do the necessary type conversions. Course, I have to wonder how well this would play with EF, and it adds two extra layers of abstraction...

- carefully craft the types used in SQL Server so that they map nicely to the same types in SQLite. So bigint instead of int (so they both come out "long"). With this, though, you are letting testing concerns dictate the database design, and you would ultimately have to restrict yourself to the capabilities of SQLite.

Aside from the contortions you would have to work on your design to get it to work in some cases, there are some things that would be nearly impossible. For example, SQLite doesn't support stored procedures, so it would be useless for trying to run integration tests against functions that take advantage of that. While I'm a big fan of Linq, and trying to use it in lieu of sprocs whenever possible, that really isn't going to be a solution all the time. What if you inherit a database with all the sprocs already written (and required to be used)?

Using SQL Server compact edition really wouldn't be a better option as it too suffers from a severely diminished feature set compared to SQL Server. As I get to understand more about the nuances of testing, and explore new tools for automation, I think the answer is two fold:

- Unit tests shouldn't talk to any database. Business logic should be exercised with mocked data.

- Integration tests should use an environment as close to production as possible, so if you are using SQL Server for production, use it for testing. Run long running integration tests as part of an automated build pipeline.

No comments:

Post a Comment